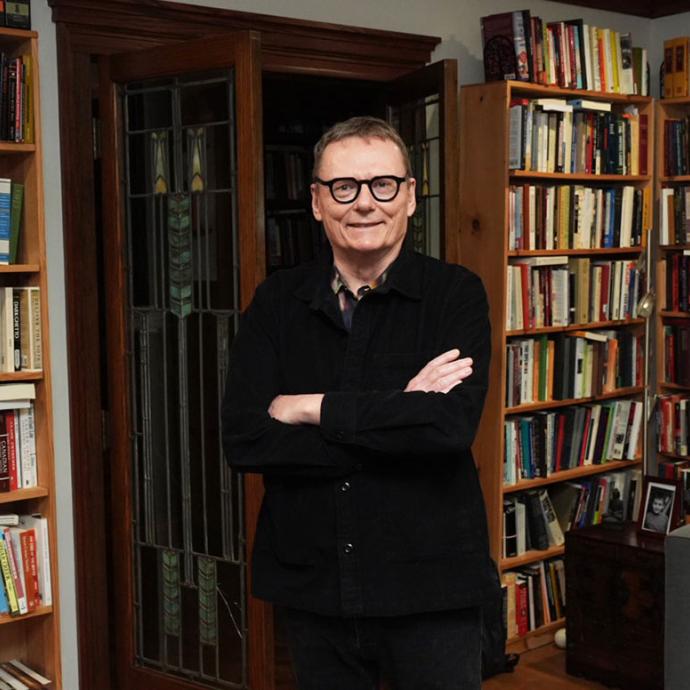

Prof. James Evans is building a treasure map. Buried underneath layers of data, he believes, are golden discoveries that could help us solve some of the world’s biggest problems—from climate change to cancer research.

The National Science Foundation has awarded the research team, led by Evans, a $20 million grant to create first-of-their-kind large language models (LLMs) intended to help predict, and strategically direct funding to, scientific discoveries and technological advancements. The team, which is partnering with the Allen Institute for AI, also includes University of Chicago researchers Prof. Ufuk Akcigit, Prof. Ian Foster and Ben Blaiszik.

“We're trying to help the government and researchers anticipate what are new and important things in science and technology,” said Evans, the Max Palevsky Professor in the Department of Sociology and Data Science at UChicago and director of the Knowledge Lab. “And how they can allocate their resources to focus on the most impactful and probable things.”

LLMs such as GPT-4, the model behind ChatGPT, are multilayered neural networks, loosely inspired by the human brain, that are designed to produce humanlike text. To generate helpful responses, these models are trained on vast amounts of text from the internet and learn to predict what information should come next. However, according to Evans, these models don’t have a great sense of time.

“Current models like ChatGPT use the web, mixed together, as data. As such, they don’t have a sense of what occurred in 2023 that was surprising with respect to 2022,” Evans said. “They don't know what things were surprising or radical.”

By mapping funded research proposals, scientific papers, and their resulting patents and products, the team plans to build models that are “chronological.” The team hopes these time-aware models will allow researchers to predict or recognize disruptive advances the moment they occur. The models will also identify how such discoveries and inventions change the landscape, revealing new follow-on opportunities. Policymakers could then use these insights to guide funding and talent toward promising areas.

Funding, like time and attention, is limited. In the U.S., the majority of science funding is distributed by the government, which, according to Evans, tends to fund research that is widely expected to yield high-value results. Evans likens this risk-averse strategy to bunting down the first-base line.

“We can’t just afford to get on base,” he said. “Major advances and opportunities—we're only going to get to some of those things within our lifetime by swinging for fast balls, for improbable opportunities.”

By guiding policymakers and the public to research areas with high potential (which might have been overlooked by human researchers and referees), the team hopes to diversify our science portfolio, encouraging funders to invest in a healthy mix of risky and safer research that spans a wide variety of risks.

Building upon these same models, the research team will also construct a “virtual laboratory” to simulate potential outcomes in the worlds of science and technology. For example, what happens if we fund a certain area of research? Or enact a particular policy? Or partner with a particular country?

The project will be built and tested in stages over five years, though the team expects to see early insights relatively soon.

“Science and technology are the engine which drives human flourishing,” Evans said. “And we need to flex our complete scientific and technological imagination to manage and lead it.”

To learn more about the project and related opportunities, visit the Knowledge Lab website.

—Prof. Jacqueline Stewart